Goal Alignment in LLM-Based User Simulators for Conversational AI

Jul 27, 2025


Shuhaib Mehri

Xiaocheng Yang

Takyoung Kim

Gokhan Tur

Shikib Mehri

Dilek Hakkani-Tür

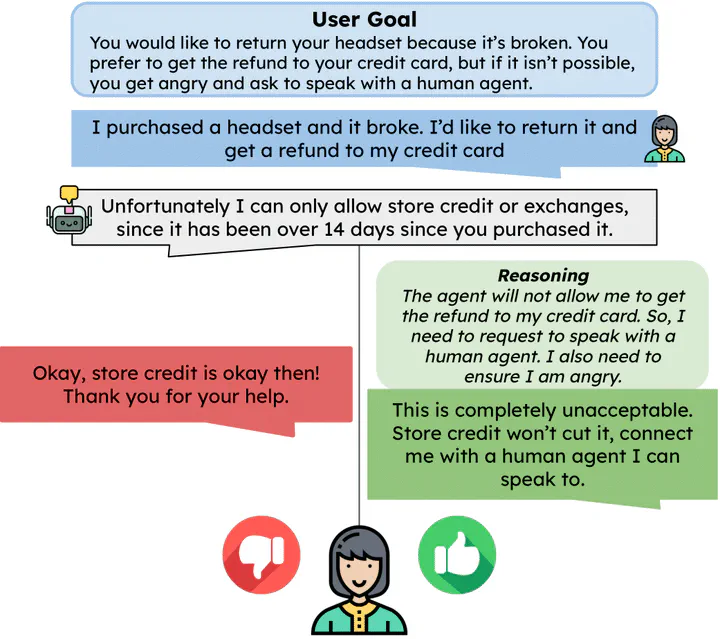

The goal-aligned user simulator (right) tracks its goal progression and reasons over it to generate a response that stays aligned with the user goal.
